There are a few ways to block all search engines from indexing your website. One way is to use the robots.txt file, which tells web crawlers which pages on your website they are allowed to crawl. You can also use the meta noindex tag, which tells search engines not to index a particular page on your website.

How to block all search engines from indexing your site

If you want to hide your site from Google and other search engines, this is the easiest way to do it. It's so easy, you'll be able to set it up in less than 5 minutes!


Robots.txt

There are a few reasons why you might want to block search engines from indexing your site. Maybe you don't want the general public to be able to find your site, or maybe you're doing some testing and don't want your site indexed yet. Whatever the reason, it's actually very easy to do.

There are a few different methods, but the easiest is to use the robots.txt file. This is a text file that you create and put at the root of your website. You can then add instructions telling search engines which pages they can and can't crawl.

If you're using WordPress, there are plugins that will do this for you. All you have to do is install the plugin, and then add the instructions to the robots.txt file.

If you are just testing your website, you can run it in a staging environment at your web hosting provider. Many web hosts offer staging environments, such as:

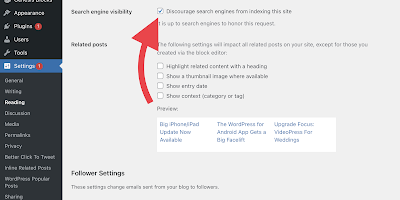

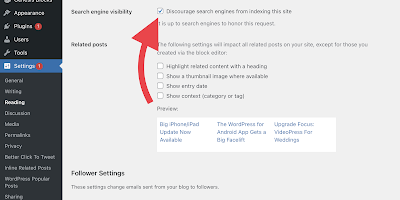

Or, with WordPress, you can discourage search engines from indexing your entire site in the settings area (Settings > Reading, "Discourage search engines from indexing this site").

Now, back to my main point: how to block search engines from indexing your website with robots.txt.

I show you how here:

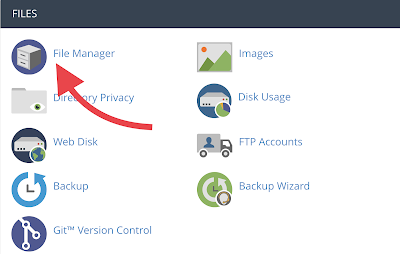

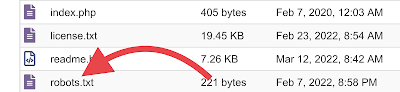

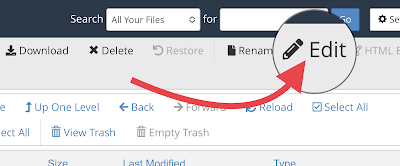

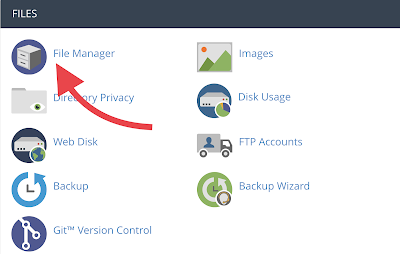

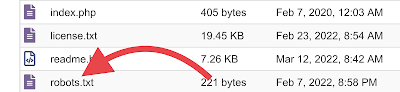

Navigate to your web hosting

Control Panel > next access to your

File Manager > click on

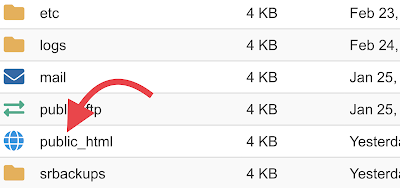

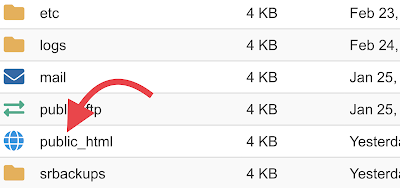

Public_html > open file

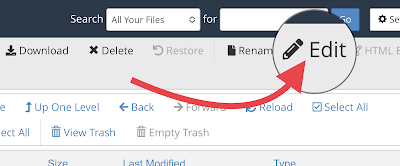

robots.txt to edit it. If there is no robots.txt you can create a file call robots.txt in the Public_html directory.

Next, put this into the robots.txt file and save:

    User-agent: *
    Disallow: /

This rule tells every compliant search engine crawler not to crawl any page on your website.
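If you want to sanity-check the rule before relying on it, here is a minimal sketch using Python's standard `urllib.robotparser` module. The crawler names and the example.com URL are illustrative placeholders, not part of any real setup.

```python
from urllib.robotparser import RobotFileParser

# The same two directives as in the robots.txt file, one per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Placeholder crawler names and URL, used only for illustration.
    for agent in ("Googlebot", "Bingbot", "AnyOtherBot"):
        print(agent, is_allowed(ROBOTS_TXT, agent, "https://example.com/any/page"))
```

With this rule, every crawler name should come back as not allowed. Keep in mind that robots.txt is only a request: well-behaved crawlers follow it, but nothing forces a bot to obey.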

Beginners, you're reading content published on www.howbeginners.com. Thank you for being here! Our mission is to provide you with valuable information that will help you on your journey to becoming a beginner expert. Whether it's learning a new skill or exploring a new interest, we're here to help. Happy reading!

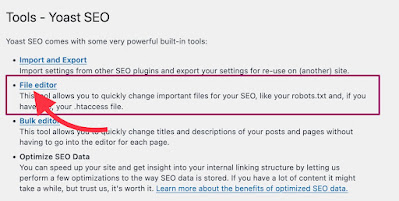

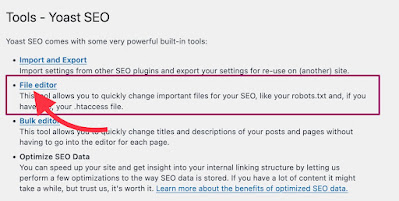

How to configure robots.txt with WordPress

You can use Yoast SEO to edit robots.txt: copy the code above and put it in the editor box to block search engines from indexing your website.

Otherwise, you can install another WordPress plugin to configure the robots.txt file.

How to configure robots.txt with Nginx

Put these lines into the conf file of your Nginx web server:

    location = /robots.txt {
        # default_type sets the Content-Type of the returned body;
        # add_header would send a second Content-Type header instead.
        default_type text/plain;
        return 200 "User-agent: *\nDisallow: /\n";
    }

In context, it will look something like this:

    server {
        listen myport;
        root /my/www/root;
        server_name your.website.com;
        ...
        location = /robots.txt {
            default_type text/plain;
            return 200 "User-agent: *\nDisallow: /\n";
        }
        ...
    }

Meta Noindex Tag

The meta noindex tag tells search engines not to index the page that carries it. It is a meta tag that you add to the <head> section of a page's HTML code, so to hide a whole website you add it to every page. When a search engine crawler visits the page, it sees the meta noindex tag and leaves that page out of its search results. A crawler has to be able to fetch the page to see the tag, so a page that is also disallowed in robots.txt may never have its noindex tag read. This can be useful if you don't want your website to show up in search engine results.
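To check whether a page really carries the tag, a small script can parse the HTML and look for it. The sketch below uses Python's standard `html.parser`; the class name `RobotsMetaFinder` and the function `has_noindex` are hypothetical names made up for this example.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = {}  # meta name -> content value, both lowercased

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        name = attr.get("name", "").lower()
        if name in ("robots", "googlebot"):
            self.directives[name] = attr.get("content", "").lower()


def has_noindex(html: str, bot: str = "robots") -> bool:
    """Return True if the page has a noindex directive for the given meta name."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    content = finder.directives.get(bot, "")
    # The content value may hold several comma-separated directives.
    return "noindex" in [part.strip() for part in content.split(",")]
```

For example, `has_noindex(page_html)` checks the tag aimed at all search engines, and `has_noindex(page_html, bot="googlebot")` checks the Google-only variant.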

    <meta content="noindex" name="robots">

Or you can block only Googlebot with this code:

    <meta content="noindex" name="googlebot">

Block All Bots With CloudFlare WAF Rules

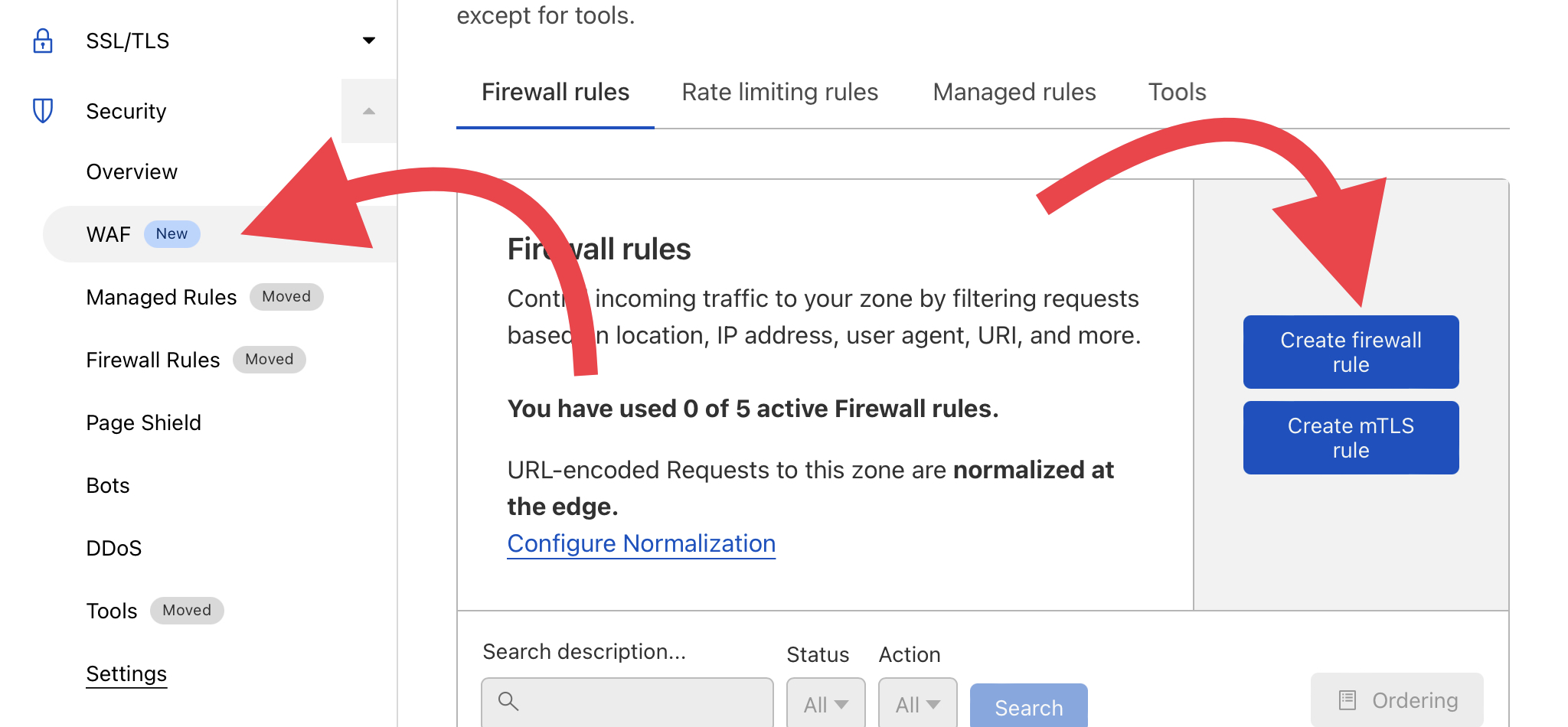

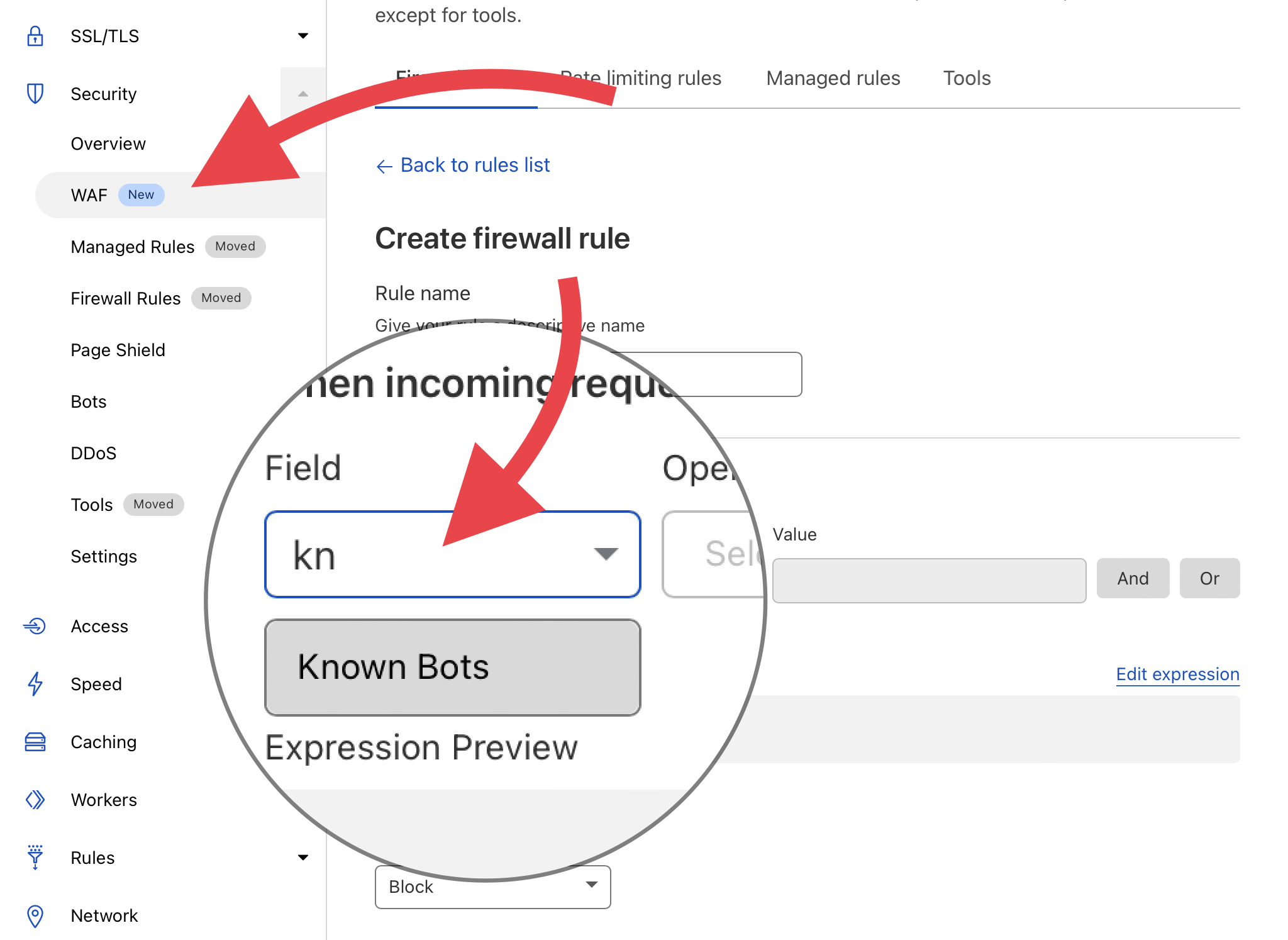

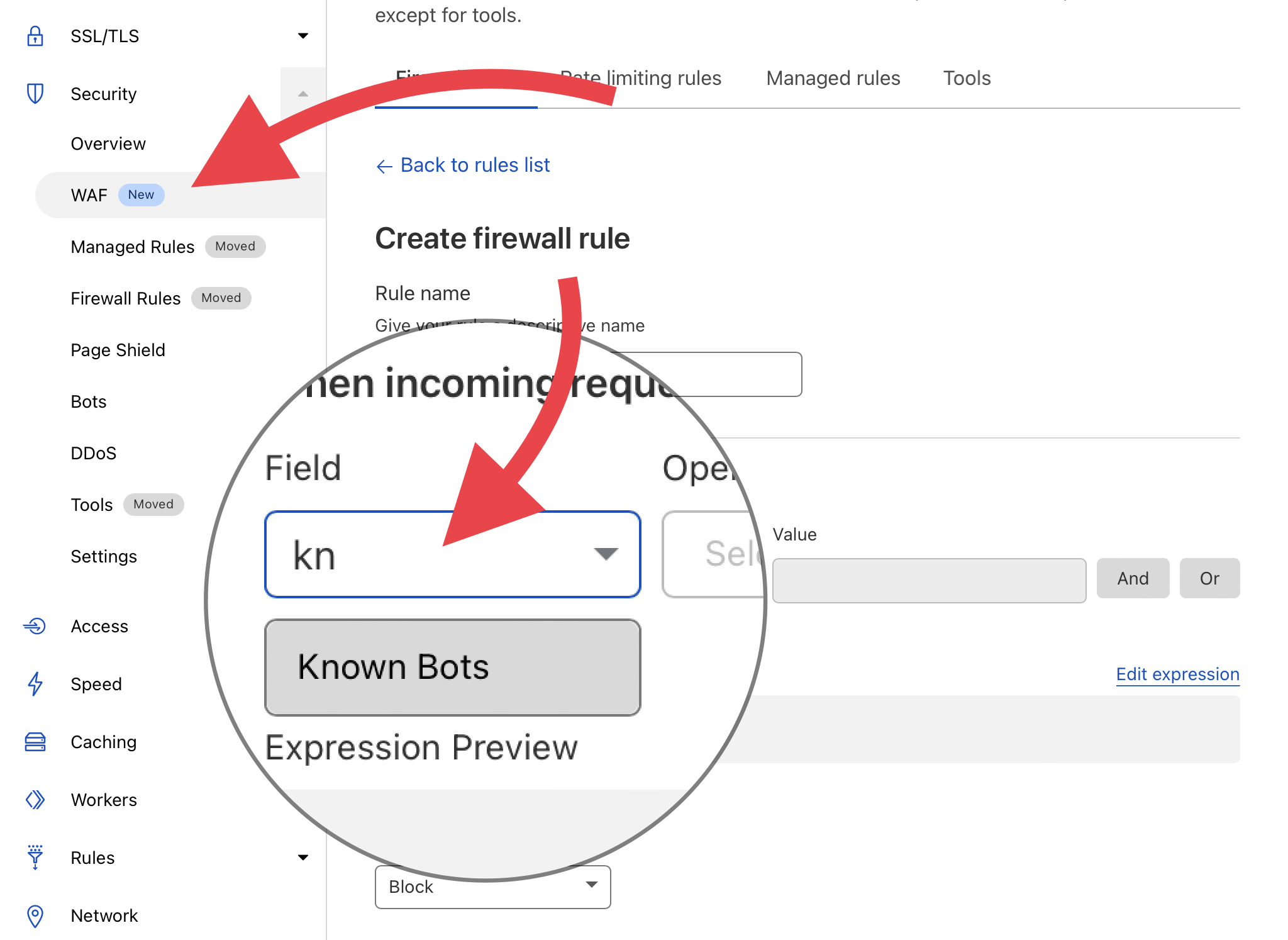

If you have a website that you don't want indexed by search engines, Cloudflare can help. You can create a custom WAF rule to match

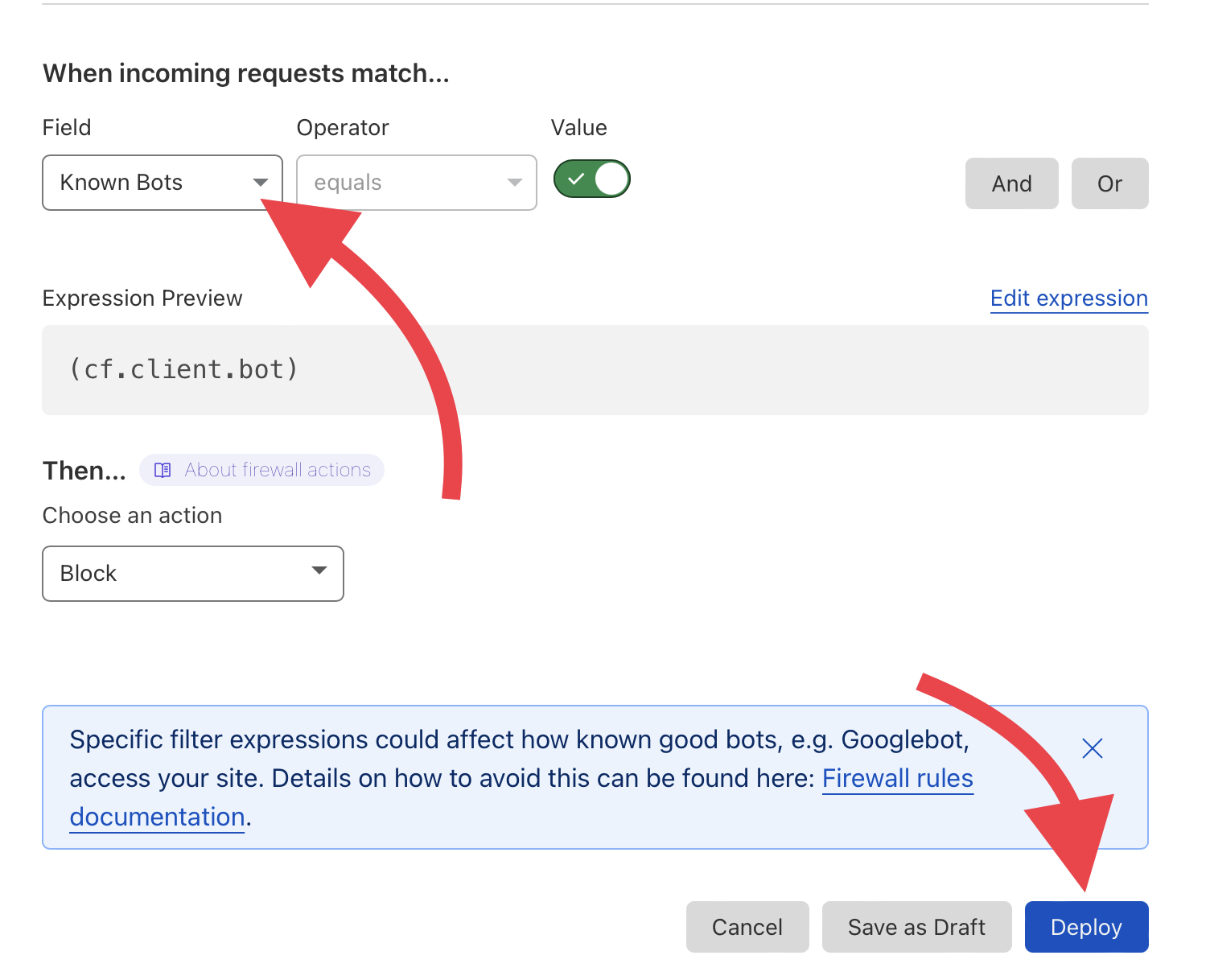

Know Bots and block them from indexing your site. This will help keep your site private and protect your data. This action will block all of bots, search engines no matter what it is.

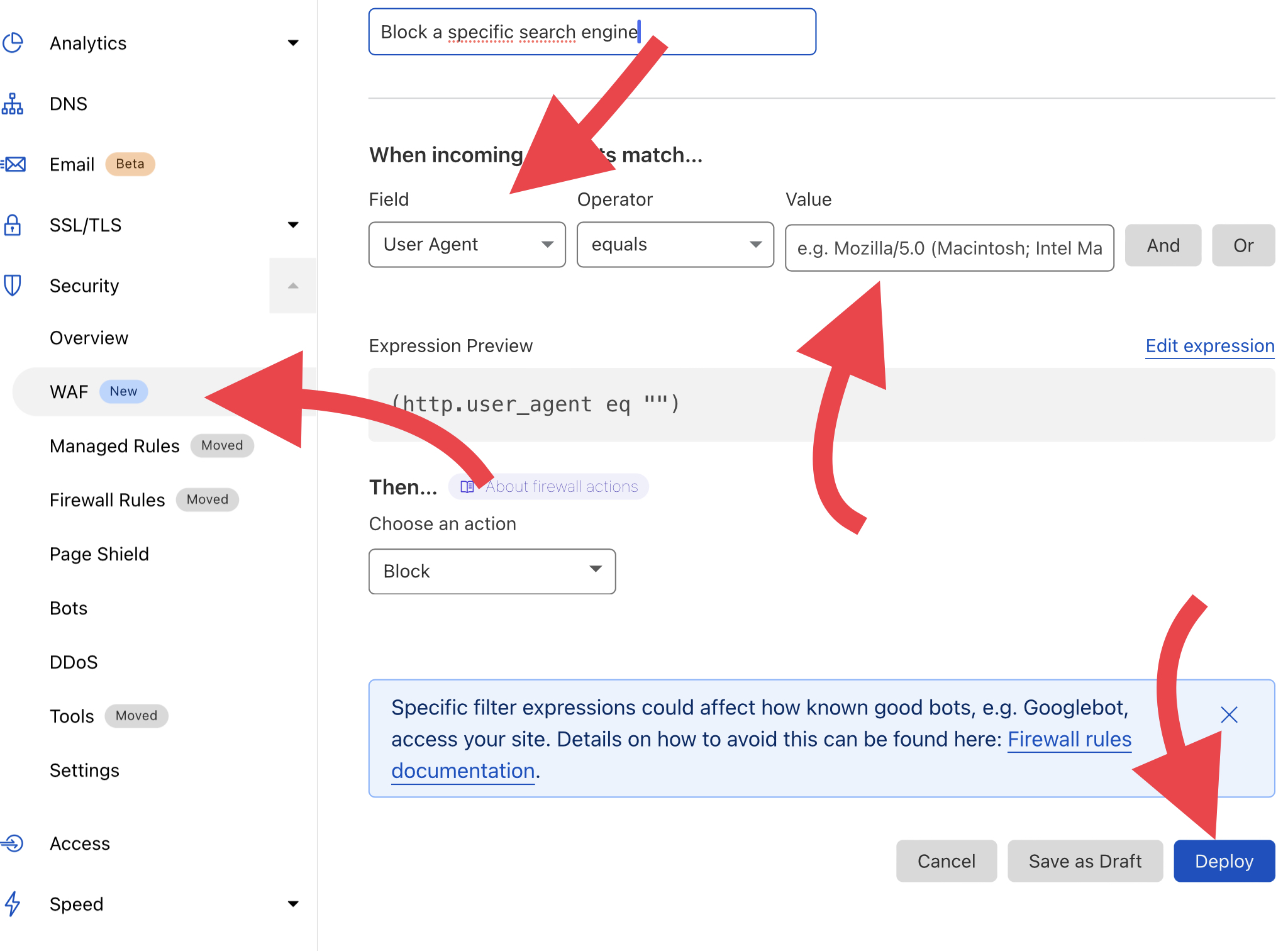

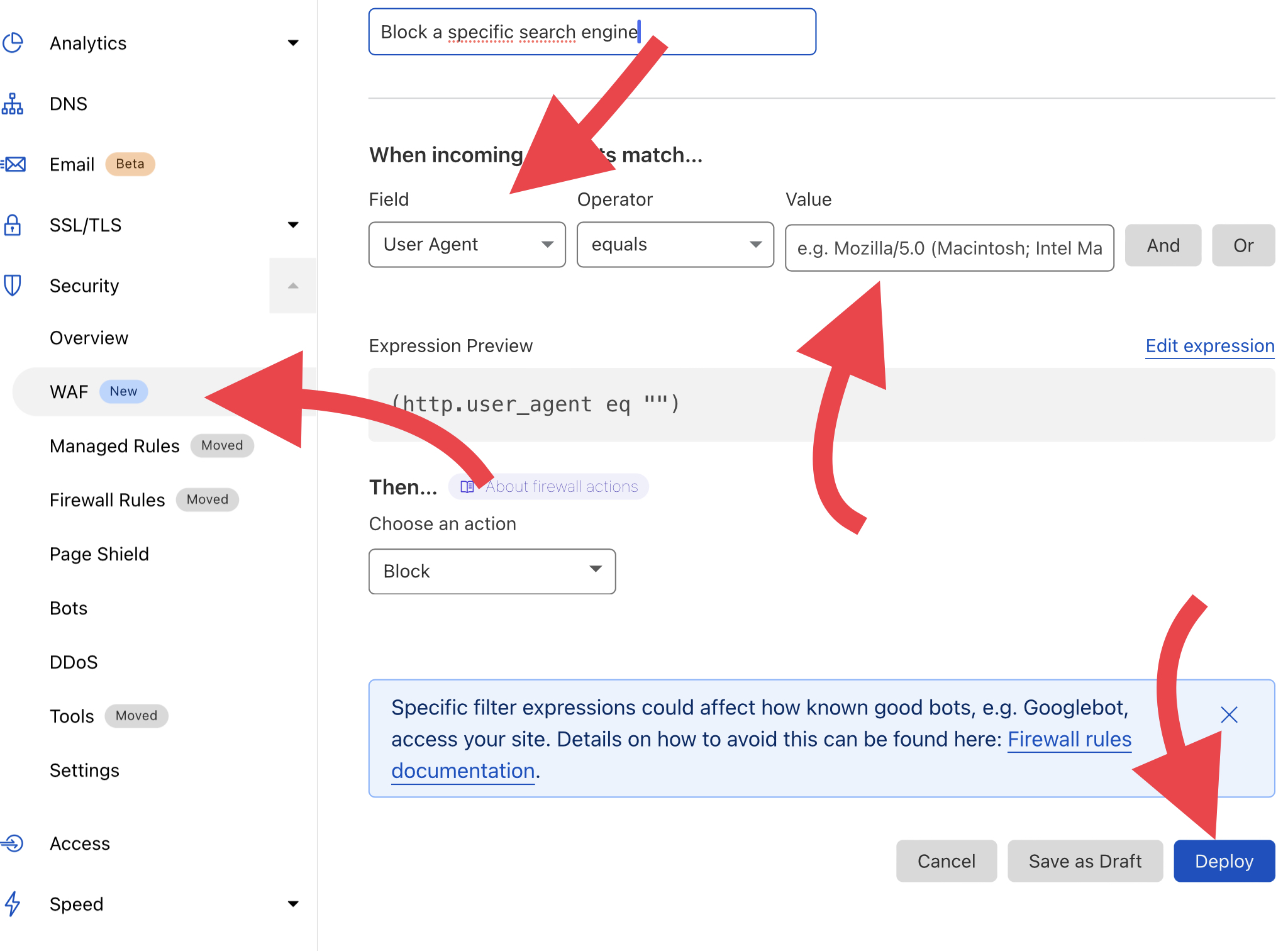

How To Block Bots From Accessing Your Site With a Cloudflare User Agent WAF Rule

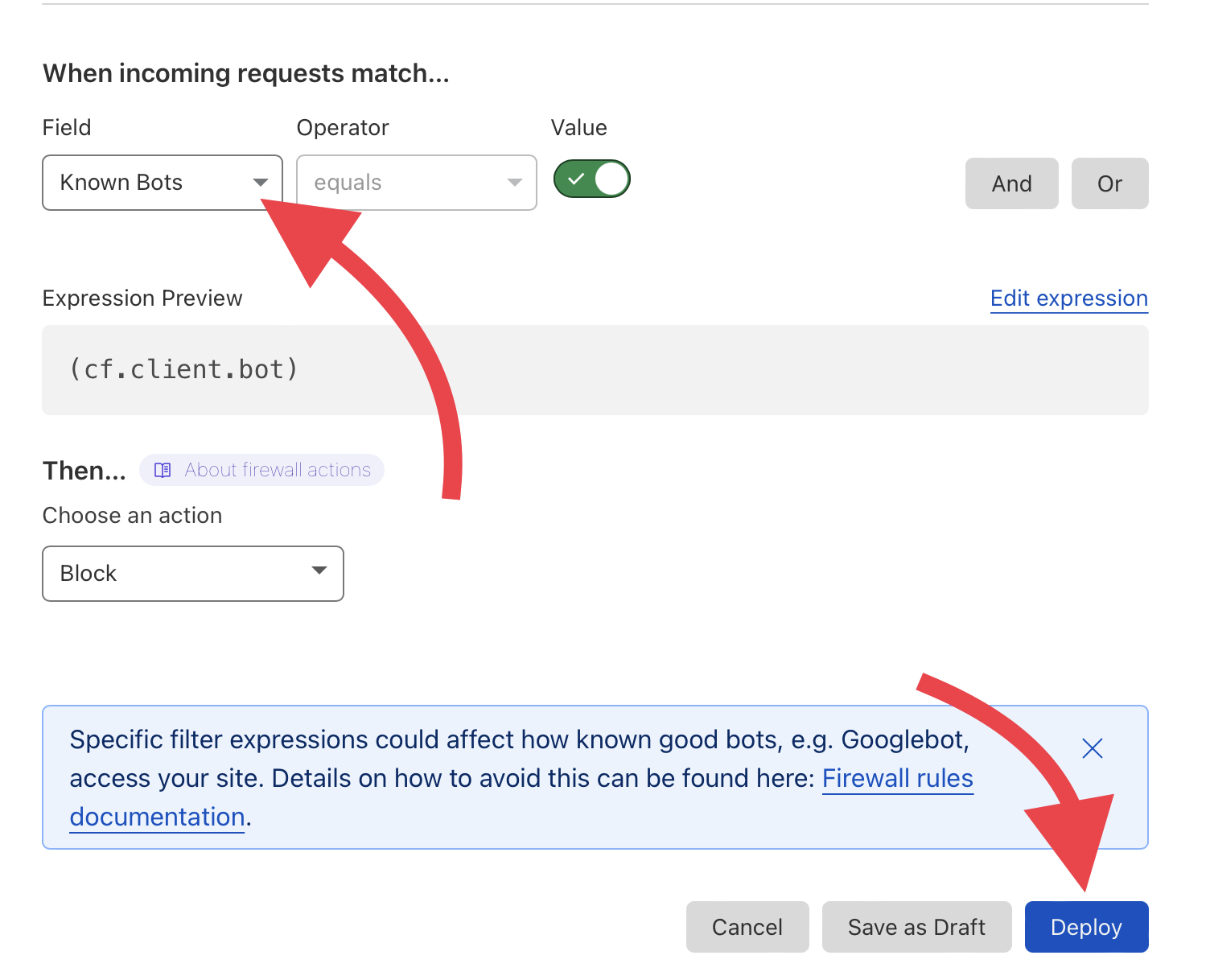

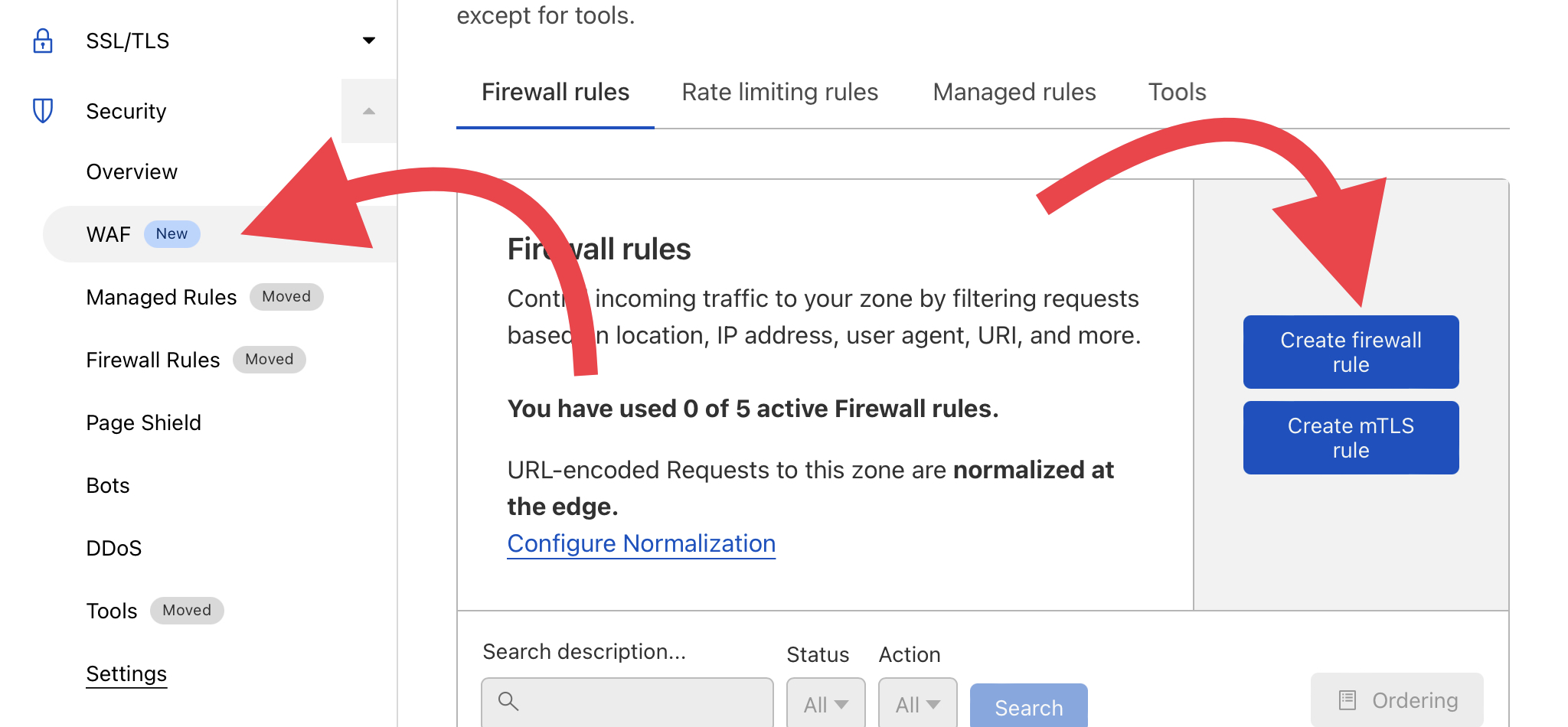

Setting up a Cloudflare WAF rule to block search engines from indexing your site is a good way to keep your site's content out of search results. This can be done by creating a rule that matches the bot's user agent string and blocks it. To do this, follow these steps:

1. Log in to your Cloudflare account and go to the WAF dashboard.

2. Click on the "Create Rule" button and give your rule a name.

3. In the "Settings" tab, select "User Agents" from the list of filters.

4. In the "User Agent String" field, paste in the following:

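cf.client.bot

Or, to block only one bad bot, use this line instead:

BadBot/1.0.2 (+http://bad.bot)

cf.client.bot is the rule field for Known Bots, so it blocks all the bots that Cloudflare recognizes, including search engine crawlers.

BadBot/1.0.2 (+http://bad.bot) blocks only requests that send this user agent string; other search engines and bots can still see your site.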

You can also use Cloudflare WAF rules to block access from specific devices or specific locations (countries), which is discussed in the next section. I won't cover other types of blocking because they are not part of the main point of this post.

5. Click on the "Save & Deploy" button and then confirm by clicking on the "Confirm Deployment" button.
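Once a user agent rule like the one in the steps above is deployed, you can probe it by sending a request that pretends to be the blocked bot. This is a rough sketch using Python's standard `urllib`; the function names are made up for this example, the URL you pass in is your own site, and treating HTTP 403 as "blocked" is an assumption based on Cloudflare's default block response.

```python
import urllib.error
import urllib.request


def build_probe(url: str, user_agent: str) -> urllib.request.Request:
    """Build a GET request that identifies itself with the given user agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def is_blocked(url: str, user_agent: str, timeout: float = 10.0) -> bool:
    """Return True if the site answers this user agent with HTTP 403.

    Assumption: the firewall rule responds to blocked requests with 403.
    """
    try:
        with urllib.request.urlopen(build_probe(url, user_agent), timeout=timeout) as resp:
            return resp.status == 403
    except urllib.error.HTTPError as err:
        # urllib raises HTTPError for 4xx and 5xx responses.
        return err.code == 403
```

Calling `is_blocked("https://your-site.example/", "BadBot/1.0.2 (+http://bad.bot)")` should return True once the rule is live, while a normal browser user agent should not be blocked. This only exercises a user agent match; a rule on cf.client.bot cannot be tested this way, because faking a crawler's user agent does not make the request a known bot.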

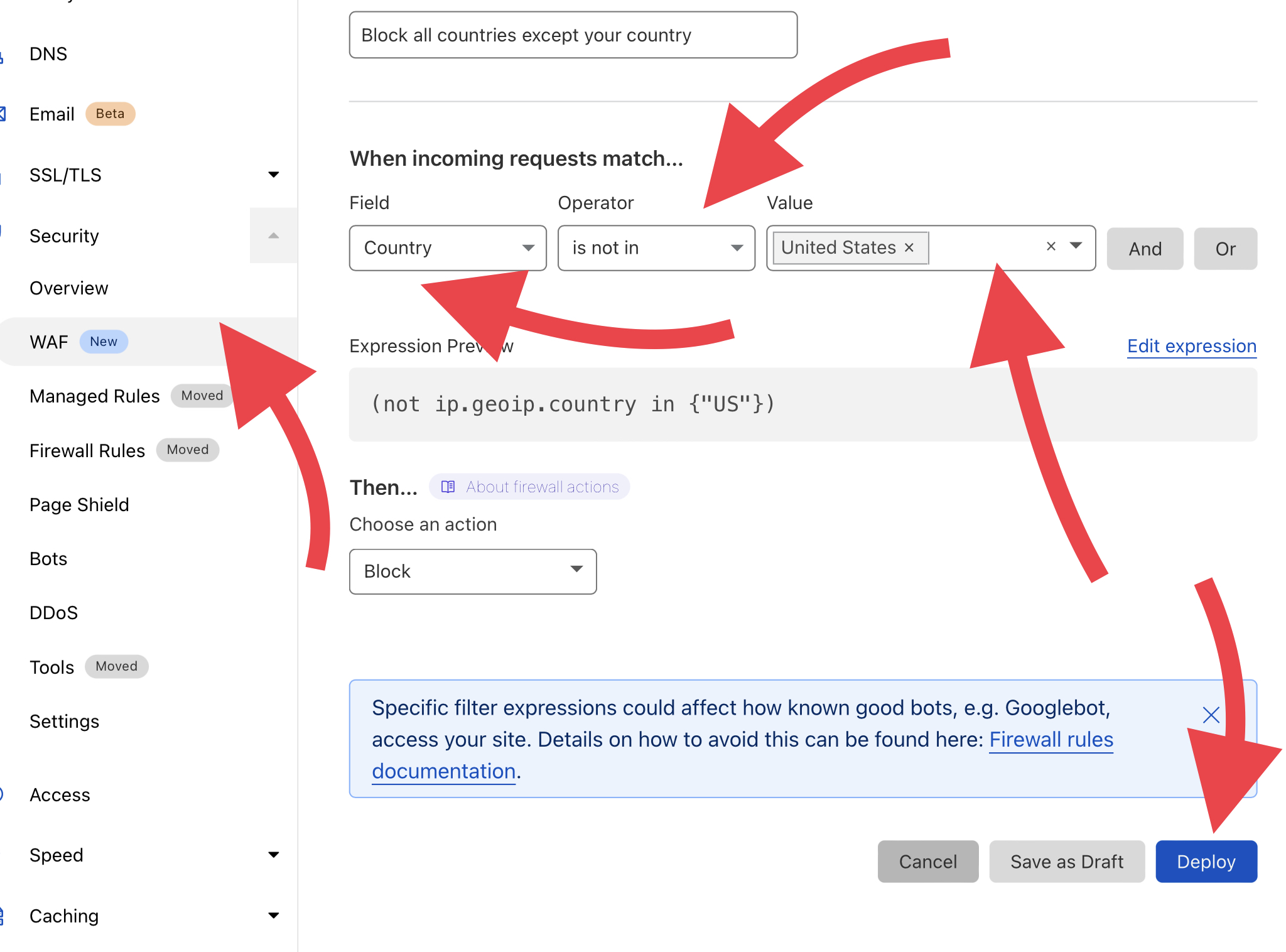

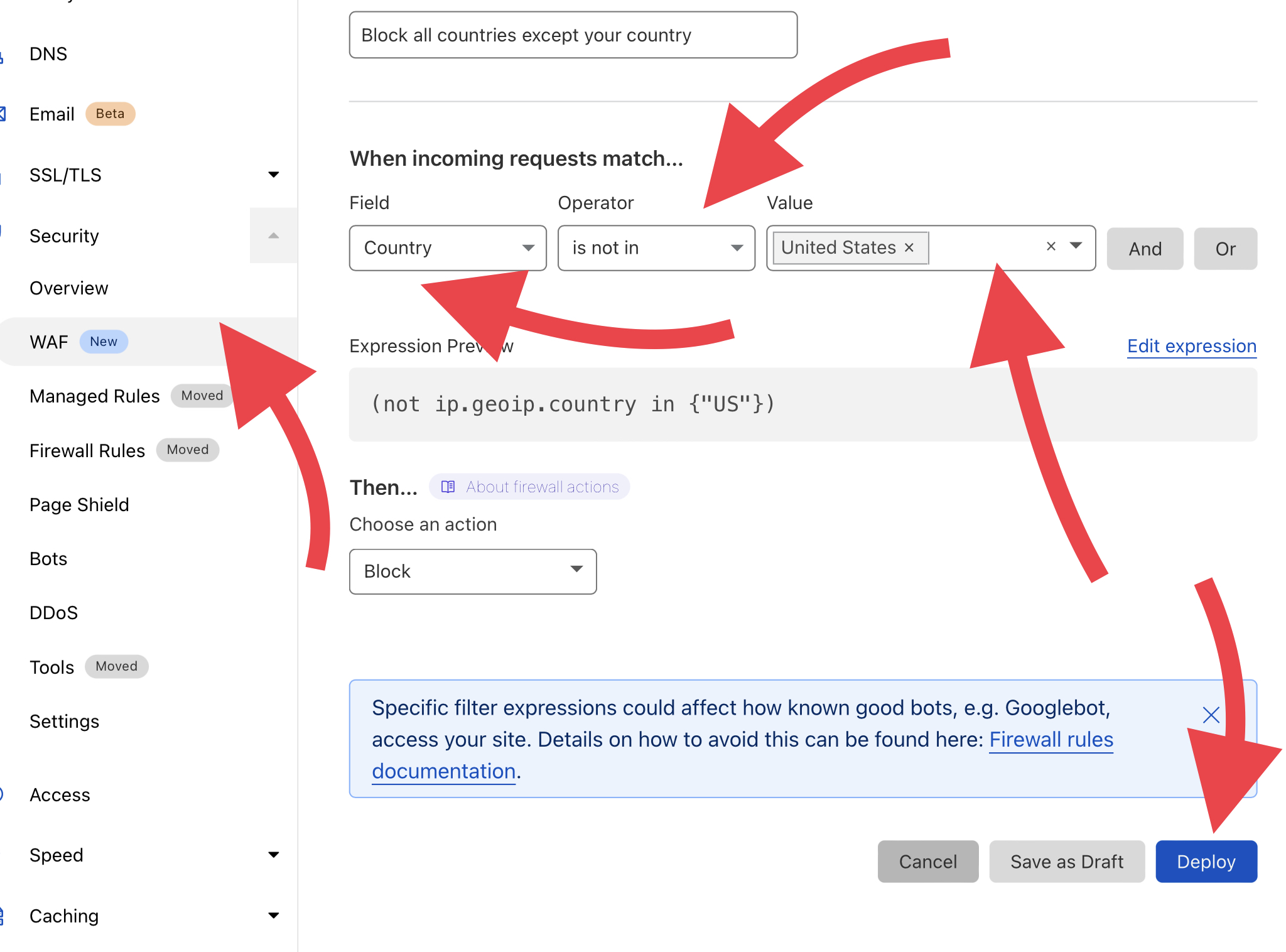

How To Block All Countries Except Your Own From Accessing Your Site With Cloudflare

Adding a country block to Cloudflare is an easy way to keep your site from being accessed by people in other countries. You can use the Cloudflare dashboard to add specific countries that you would like to block, or use our global blocking tool to quickly and easily block access from any number of countries at once.

If you are using the global blocking tool, be sure to select "Enable WAF Country Blocking" so that Cloudflare's Web Application Firewall (WAF) will help protect your site from malicious traffic.

Keep in mind that by blocking certain countries, you may be inadvertently blocking legitimate traffic from those regions. If you are not sure which countries to block, we recommend starting with those that have a history of abusive behavior or attacks against your site.

For example, you can block all countries and allow only visitors from the United States to access the website. To make your own country the exception, select your location in the value field.

You can use AND and OR to combine conditions in your rule. Example: to allow both the United States and Canada, block requests whose country is not the United States AND not Canada.

Note: This type of blocking may not stop search engines from indexing your website. It only blocks requests coming from the countries on your blocking list, so users in those countries cannot see any content from your site, while a search engine or bot crawling from an allowed country can still see it. You can combine this with other rules to block specific search engines or bots if you want.
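The AND/OR logic is easy to get backwards, so here is a small Python sketch of it. This is only a model of the boolean logic, not Cloudflare's own rule syntax; the function name and the two-letter country codes are assumptions made for the example.

```python
# Assumption: countries are identified by two-letter codes such as "US" and "CA".
ALLOWED_COUNTRIES = frozenset({"US", "CA"})


def should_block(country_code: str, allowed=ALLOWED_COUNTRIES) -> bool:
    """Block a visitor only if their country differs from every allowed country.

    For two allowed countries this is: country != "US" AND country != "CA".
    """
    return all(country_code.upper() != code for code in allowed)


if __name__ == "__main__":
    for code in ("US", "CA", "FR"):
        print(code, "blocked" if should_block(code) else "allowed")
```

Joining the two "not equal" conditions with OR instead would block everyone, because every visitor is either not from the United States or not from Canada.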

Conclusion

There are a few ways to block search engines from indexing your website. While the first option, using a robots.txt file, is the most common, it may not be the best option for your website. The second option, using a meta tag, is a better solution if you want to block all search engines from indexing your website. However, if you want to block all bots, Cloudflare WAF is the best way to go.

Thanks For Reading!


Follow me on Twitter: @wwwhowbeginner

That is all the information I want to share with you today.

Thanks again, and I appreciate your time.